The best way to spot AI is also the easiest

You should learn this future-ready AI spotting method now.

How to make AI spotting easy

Finding AI videos is hard, but finding AI accounts is easy. Anyone can do it with some basic knowledge about how AI media has advanced over the past two years.

I often joke about how many beautiful 24-year-olds discovered Instagram for the first time in November of 2025. Google’s Nano Banana Pro was released November 20th, and a rush of fake people registered. Let’s turn this into a practical way to find fake accounts. Put a pause on finding individual AI photos and AI videos; it’s more efficient to find the AI accounts, then work backwards.

In this piece we’ll plot out the timeline for recent advancements in AI generation and how new accounts and videos coincide with each change.

The Account Age Paradox

It’s obvious to us that an iPhone 17 Pro’s camera looks better than the camera from the iPhone 6S. 10 years of technological advancement separate them. But in 2015, a new iPhone 6S produced photos notably better than the HTC One M7 I had at the time. That HTC One took the earliest photos in my current photo library, and I look back on them fondly.

The same cannot be said of an AI creator in the year 2035 who is trying to prove their character is real. Can they look back at the old Veo 3 generations from 10 years ago to establish proof of life? Of course not - that has the opposite effect, because Veo 3 looks awful and obviously AI to people in 2035. This is a huge difference between AI and real media, and one that AI creators are already aware of.

On Instagram, relevant AI accounts are usually just a few months old and pop up with AI media innovations. When I find a suspected AI character on TikTok, which unlike Instagram or YouTube does not make “account age” visible on the app, I immediately scroll down to their earliest posts. Did they leave the old, bad generations, or did they delete them and start over recently? Either is a huge red flag and nearly impossible to overcome.
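Here is a minimal sketch of that check. It uses the account creation date when the platform shows it and falls back to the earliest visible post when it doesn't. The function names, the example dates, and the 180-day reading of "a few months old" are my own assumptions for illustration, not anything the platforms provide.

```python
from datetime import date
from typing import Optional, Sequence

# Assumption: "a few months old" is read as roughly six months.
RECENT_DAYS = 180


def account_start(post_dates: Sequence[date],
                  created: Optional[date] = None) -> Optional[date]:
    """Best available start date for an account.

    Prefer the creation date (Instagram, YouTube). When it is hidden
    (TikTok), fall back to the earliest post still visible.
    """
    if created is not None:
        return created
    return min(post_dates) if post_dates else None


def is_recent_account(post_dates: Sequence[date], today: date,
                      created: Optional[date] = None) -> bool:
    """True if the account's visible history starts within RECENT_DAYS."""
    start = account_start(post_dates, created)
    if start is None:
        return False  # nothing to go on
    return (today - start).days <= RECENT_DAYS


# Hypothetical TikTok account: no creation date, three visible posts.
posts = [date(2026, 1, 14), date(2026, 1, 3), date(2026, 2, 1)]
print(account_start(posts))                        # earliest visible post
print(is_recent_account(posts, date(2026, 2, 10)))
```

The earliest visible post only tells you the account is at least that old. If older posts were deleted, the visible history still starts recently, which is exactly the "start over" pattern described above.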

Putting it into action

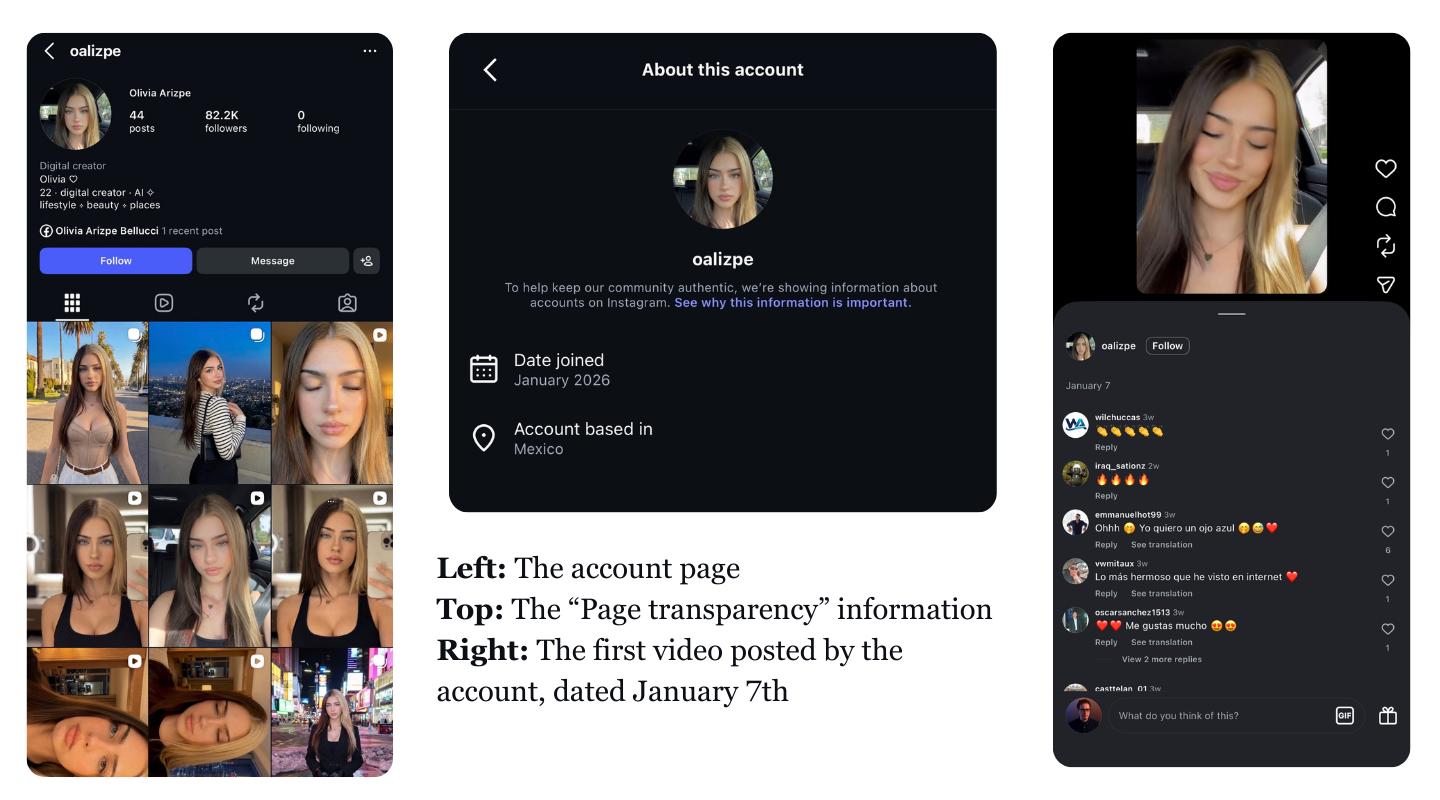

I just found a new account on Instagram: an AI influencer named Olivia Arizpe. Her bio includes both “Digital Creator” and “AI”, but let’s pretend the creator wasn’t so honest.

First, I’ll tap on the three dots at the top of the account page to pull up “About this account.” Here I see that the account was created in January of 2026. The first video is also from January of 2026. This video shows the AI avatar lip-syncing to a Tame Impala song. This follows the “motion control” trend I’ll talk about later. That’s all the information I need to confidently call this an AI account.

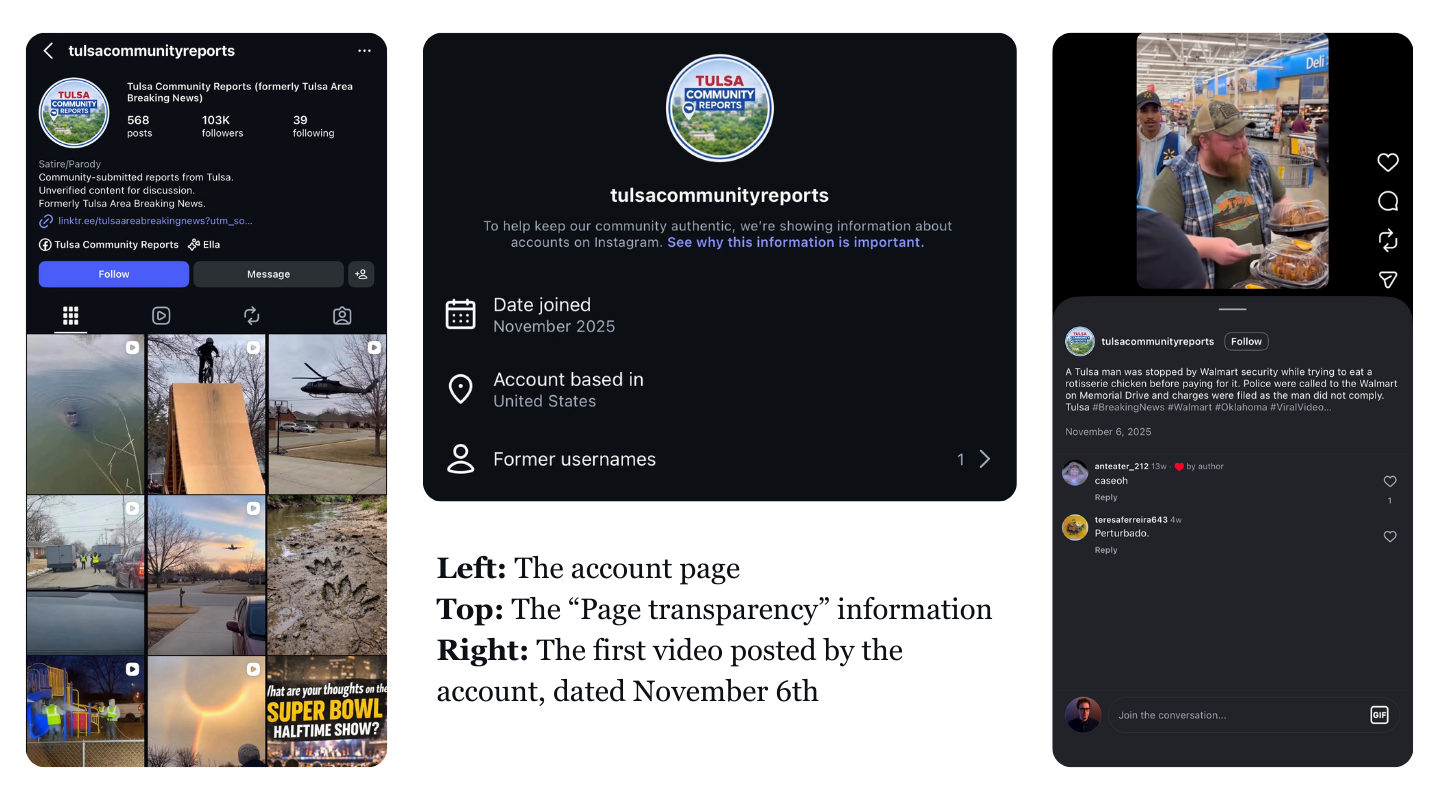

Or what about this account, a “News” page that claims to be from Tulsa, Oklahoma?

This page used to be called “Tulsa Area Breaking News”, but it’s just an AI slop page. They started in November, and their earliest available video is from the same month. This follows OpenAI’s release of Sora, and indeed there are Sora watermark scrubbing artifacts. Not to mention, their first video follows the format of the AI-generated EBT videos coming out at the time, and those were almost entirely generated with Sora.

None of this required good eyesight or any pixel peeping. And if any shiny, new AI slop comes out of the AI-generated Tulsa area and they really want to trick you, they’ll have to delete the old stuff.

We dive into how to find account transparency information in this article, by the way. It contains instructions for Instagram, Facebook, YouTube, TikTok, and X.

Granted, it’s unreasonable for you to remember that Sora came out in October, or that Motion Control AI generators were trending in January. Luckily, you don’t have to remember: I wrote it all down below for you to reference any time you need.

The practical AI release timeline

When coming across an account we suspect is AI-generated, we’re looking for either:

A relatively new account that appeared and immediately implemented some recent technology

An account that shows signs of older or more affordable AI technologies in older posts

Let’s look at previous major advancements in AI media and how those popped up on the Internet. This will not only demonstrate how new technology changes trends on social media, but also what to look for when looking back at old posts. This will also give us some important cutoff dates. For example, if you see a dance video from 2023, you can be sure it wasn’t AI-generated.

Before 2025

2025 will probably go down as the most pivotal year in AI media history, and most of the AI-focused accounts today don’t use models from 2024 or earlier. But there are some notable exceptions you should be aware of.

Until Late 2023 - Deepfakes and AI avatars

In 2018, deepfake technology was creating memes and porn, but it was very much in the experimentation phase. Deepfakes aren’t AI videos (they use a different tech architecture), but they’re the first popular form of “AI video” in its broadest possible definition. The corporatized versions of deepfakes are usually called “Avatars”. These are made by companies like Synthesia, who were already around by 2020, and companies like HeyGen joined the mix later.

Important Takeaway: Face-centric “AI videos” were already possible before 2023, but their uses were pretty limited.

Late 2023 to Early 2024 - AI Image slop starts hitting social media

A lot of Facebook posts still have what I would call “classic” AI slop. These are the images created by technology from late 2023. For example:

OpenAI released DALL-E 3 in October of 2023

Midjourney released V6 in December of 2023

While these are just two of many image generators of this era (and OpenAI is deprecating the DALL-E 3 API shortly), there are many accounts still stuck in this era because it’s cheap! These sorts of images became ubiquitous when OpenAI integrated DALL-E into ChatGPT, lowering the barrier to entry and opening the AI slop floodgates.

This is the era of Shrimp Jesus and “engagement slop” like old, disabled puppies asking for donations, or ragged puppies begging for likes. Today, we still see NFL coaches who desperately need help with medical bills. They don’t look like the coaches exactly, there’s a yellowish tint, and it’s giving “Polar Express.”

Important Takeaway: If you see a decent image from before mid-2023, its foundation is probably a real photo. Maybe it’s a heavily edited real photo, a drawing, or a 3D render from this era’s already incredibly powerful animation or gaming engines. But it wasn’t made by “AI”.

Early to mid 2024 - First (decent) AI Video models released publicly

Sora got a lot of early attention in February of 2024, followed by models like Luma Dream Machine and Runway Gen-3 in June of that year. AI video was still relatively limited, but boosted by many new scaling and training advancements from 2023. Given those limitations, memes and AI video slop were its primary social media uses.

Important Takeaway: If you see a decent-quality video posted before mid-2024, unless it was professionally modified or rendered, it’s a real video. There aren’t any good “AI videos” before this point.

2025

March - ChatGPT 4o Images

I still remember sitting on the couch with my wife on March 26th, 2025 - the day after OpenAI released ChatGPT’s 4o image generation. The internet was lit up with Studio Ghibli-ified images. Feeling guilty about the inherent ethical quandaries of that style, I instead made a photo of our recent vacation in the Family Guy art style. Our surprise and fear from that day seem pretty quaint in hindsight, given what else 2025 was about to bring.

AI-generated profile pictures from this era are still common. The yellow-tinted, glossy, evenly-lit photos from this generation are ubiquitous. Since ChatGPT is the most popular large language model, its built-in image generation is also really popular.

May - Google Veo 3

Veo 3 was the first AI video model with meaningful sound, and it was also a jump forward in video quality. It generated videos with a cinematic look and feel, though it definitely wasn’t up to cinematic standards. As a result, though it was envisioned as a tool for creatives, it instead flooded the internet with AI videos.

A Veo 3 review was my first ever YouTube video. Today, Veo 3 makes a platonic “AI video” that’s relatively easy to spot once you’re familiar with it. The most distinctive characteristics include smooth and even lighting, temporal inconsistencies and background issues, and the characters’ robotic and melodramatic voices.

Immediately in May, a ton of AI slop pages popped up on social media. Since Veo 3 could only do widescreen 16:9 videos at first, vertical-native platforms like TikTok suddenly had a ton of new accounts posting AI videos with black bars (also known as letterboxes) on the top and bottom of every video. ASMR fruit-cutting videos and AI man-on-the-street interviews were trends. But also, people’s first “I got tricked” moments came with Veo 3’s bunnies on trampolines.

Important Takeaway: If you find a realistic video with matching sound or dialogue posted before May of 2025, unless it was generated with a game or animation engine, it’s a real video.
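The three cutoffs so far can be written down as a tiny lookup. This is a rough sketch, not a detector: the exact days are my assumption (I read "mid-2023" and "mid-2024" as July 1st and "May of 2025" as May 1st), and the names `CUTOFFS` and `predates_ai` are made up for this example.

```python
from datetime import date

# Cutoffs paraphrased from the "Important Takeaway" notes above.
# The specific days are assumptions; the article only gives rough months.
CUTOFFS = {
    "image": date(2023, 7, 1),             # decent images: "mid-2023"
    "video": date(2024, 7, 1),             # decent video: "mid-2024"
    "video_with_sound": date(2025, 5, 1),  # realistic video with matching sound
}


def predates_ai(kind: str, posted: date) -> bool:
    """True if a post is older than the cutoff for that kind of media."""
    return posted < CUTOFFS[kind]


print(predates_ai("video", date(2023, 6, 1)))             # dance video from 2023
print(predates_ai("video_with_sound", date(2025, 6, 1)))  # after Veo 3
```

A `True` result only rules out generation by these AI models. It says nothing about professional editing, game engines, or animation, and it assumes the post date itself can be trusted.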

Mid-2025 - Other video models

Runway Gen-4 (April) and Kling 2.1 (May) arrived around the same time as Veo 3. These were similar in video quality to Veo 3, but neither had synchronized sound. They traded this for vertically-native videos and different video styles. Along with Midjourney releasing its first video model in June, Alibaba’s open-sourcing of Wan 2.2 in July, and many more advancements from companies like LTX and MiniMax, a plethora of good video-generation options came online in mid-2025.

With these vertical-native models, we got AI-generated landscapes, fake natural phenomena, and AI-generated cartoons for kids that were NOT kid-friendly. But this is also when AI slop started looking realistic enough to fool a ton of people in vertical feeds. By August this is almost all I covered.

August - Nano Banana

Google’s Nano Banana, the informal name for Gemini 2.5 Flash Image, was a big jump in photo quality. Before Nano Banana, mainstream AI photos still had a glossy look. After Nano Banana, photorealistic images were very accessible.

Important Takeaway: A lot of photo-only AI accounts got a huge boost or started in August of 2025.

October 2025 - Sora 2

The next jump in AI video came from OpenAI, who took a year and a half after the original Sora to release the Sora 2 video model. Alongside it was the release of the Sora app, a TikTok-style vertical video app with only AI videos.

The Sora 2 model had a few key innovations:

It felt less uncanny than Veo 3 for many viewers. Eyes, mouth, and skin detail were more realistic.

It had better physics than any other model at the time.

It was funny. Lazy prompts were spiced up by a language model in the background. This meant inexperienced AI prompters could make viral videos.

And yet, it was a very noisy model with a heavy “AI accent.”

Sora’s release coincided with a huge increase in the number of “AI slop” accounts because it was free. Until this point, good AI video generation was expensive, but the Sora app let users generate a lot of videos in the free app. These videos could be downloaded with a watermark (that was easily scrubbed), then reposted to the other, much more popular social media sites. The Sora 2 API released just 2 weeks after, providing videos without watermarks, and giving more people access to the powerful Sora 2 Pro model. To this day, a ton of AI videos come from Sora 2 because it has a good price-to-quality ratio.

Important Takeaway: Many AI video slop accounts have October 2025 birthdays or changed their usernames at this time.

November 2025 - Nano Banana Pro

Google’s jump to Nano Banana Pro (Gemini 3.0 Pro Image) was surprising. Just three months after the original, it brought big improvements to realism and quality, as well as improved spatial reasoning and text rendering. This is the point where AI photos became mostly undetectable at first glance, though they can be spotted when looking closely.

Along with Nano Banana Pro came SynthID detection, which can be accessed through Google’s Gemini large language model. SynthID is an invisible watermarking system that embeds a watermark inside the pixels of a photo, where it can later be detected.

Creators reacted accordingly. Misinformation like the infamous “Bubba Trump” photo and fake celebrity paparazzi photos spread wildly. More relevant to our everyday social media use, AI-generated influencer accounts proliferated in late November. The Nano Banana Pro release also corresponded with TikTok’s unfortunately-timed new emphasis on image carousel posts. And, since many AI video generators have a photo-to-video mode, these AI images are the starting frames for many AI videos. Nano Banana Pro is still a leader in this field, and while other generators have caught up, this was an important demarcation point.

And that wasn’t all that happened in November...

November and December 2025 - Motion Control AI improvements

Coinciding with Nano Banana Pro’s release were improvements in Motion Control models, most notably Wan 2.2 Animate. This prompting innovation lets creators take a “control” video (often a video stolen from a real creator’s social media) and “replace” the person in it with a new character, real or not. This method saw further improvement through Kling 2.6 Motion Control.

These models unlock very realistic motion and physics for AI characters. Combining Nano Banana Pro (or similar photo models) with a motion control video model lets creators generate realistic, consistent characters across posts. While not perfect (there are still plenty of AI giveaways), AI influencer creators now had a ton of tools at their disposal.

Important Takeaway: Accounts that feature human avatars and started or rebranded in November or December of 2025 are a huge red flag. A lot of AI slop accounts moved into the more profitable AI influencer business.
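To make the 2025 timeline easy to reference, here is a minimal sketch that stores the release months listed above and returns the ones that landed shortly before an account was created. The `Release` type, the `releases_before` function, and the two-month window are my own assumptions for illustration. The months come from this article, not from official release notes.

```python
from typing import List, NamedTuple, Tuple


class Release(NamedTuple):
    year: int
    month: int
    name: str


# Release months as described in this article (2025 only).
TIMELINE = [
    Release(2025, 3, "ChatGPT 4o image generation"),
    Release(2025, 4, "Runway Gen 4"),
    Release(2025, 5, "Veo 3"),
    Release(2025, 5, "Kling 2.1"),
    Release(2025, 6, "Midjourney video"),
    Release(2025, 7, "Wan 2.2"),
    Release(2025, 8, "Nano Banana"),
    Release(2025, 10, "Sora 2"),
    Release(2025, 11, "Nano Banana Pro"),
    # Motion control improvements span November and December; filed under November.
    Release(2025, 11, "Motion control (Wan 2.2 Animate, Kling 2.6)"),
]


def releases_before(created_year: int, created_month: int,
                    window: int = 2) -> List[Tuple[str, int]]:
    """Releases that landed 0 to `window` months before the account was created.

    Returns (name, lag_in_months) pairs. The default window of two months
    is an assumption, not a rule from the article.
    """
    hits = []
    for r in TIMELINE:
        lag = (created_year - r.year) * 12 + (created_month - r.month)
        if 0 <= lag <= window:
            hits.append((r.name, lag))
    return hits


# An account created in January of 2026, like the first example earlier.
for name, lag in releases_before(2026, 1):
    print(f"{name}: released {lag} month(s) before the account was created")
```

A match is a reason to look closer at the earliest posts, not proof on its own. Plenty of real people open accounts every month.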

Early 2026 trends

Releases from Kling and ByteDance show further improvements in AI video quality, which we’re still analyzing as of this post. I expect some new accounts in February that play off their strengths, but those are yet to be determined. Right now it’s a bunch of demos of stolen intellectual property and celebrity deepfakes, as can be expected with a new model release.

Moving Forward

People regularly ask me what might happen if AI video becomes “perfect” or “undetectable.” But to my eyes, real videos aren’t “perfect”. There are always artifacts of the process that made them.

I remember watching Super Bowl XLV 15 years ago, on an imposing 75-inch high-definition TV. It was incredible and perfect at the time. But looking back at it now, it looks a bit dated, even without today’s de-interlacing conversion artifacts. In 5 years, perhaps the oversaturated HDR look of today’s iPhones will be an out-of-fashion artifact of our current era.

The highest-end, most impressive AI video generations today still don’t look “real” to me, but they do look “really good”, which is real enough for most people. The difference is marginal, but with hindsight it may become obvious. Those of us who make real media will have to adapt and differentiate ourselves from AI advancements, figuring out what our advantages are. It’s always going to be changing, so stay tuned here for updates on the latest releases and countermeasures.