Sexualized AI videos of kids create real problems on Instagram

How they implicate real underage girls, and what the tech industry can learn

This report includes descriptions of sexual content involving both children and adults. There are no photos or videos included, but the topic and descriptions can be upsetting. Reader discretion is advised.

Since late December 2025, I’ve uncovered a staggering number of AI-generated, sexualized videos using the likenesses of young girls, all posted to Instagram. It’s not just happening in the shadows:

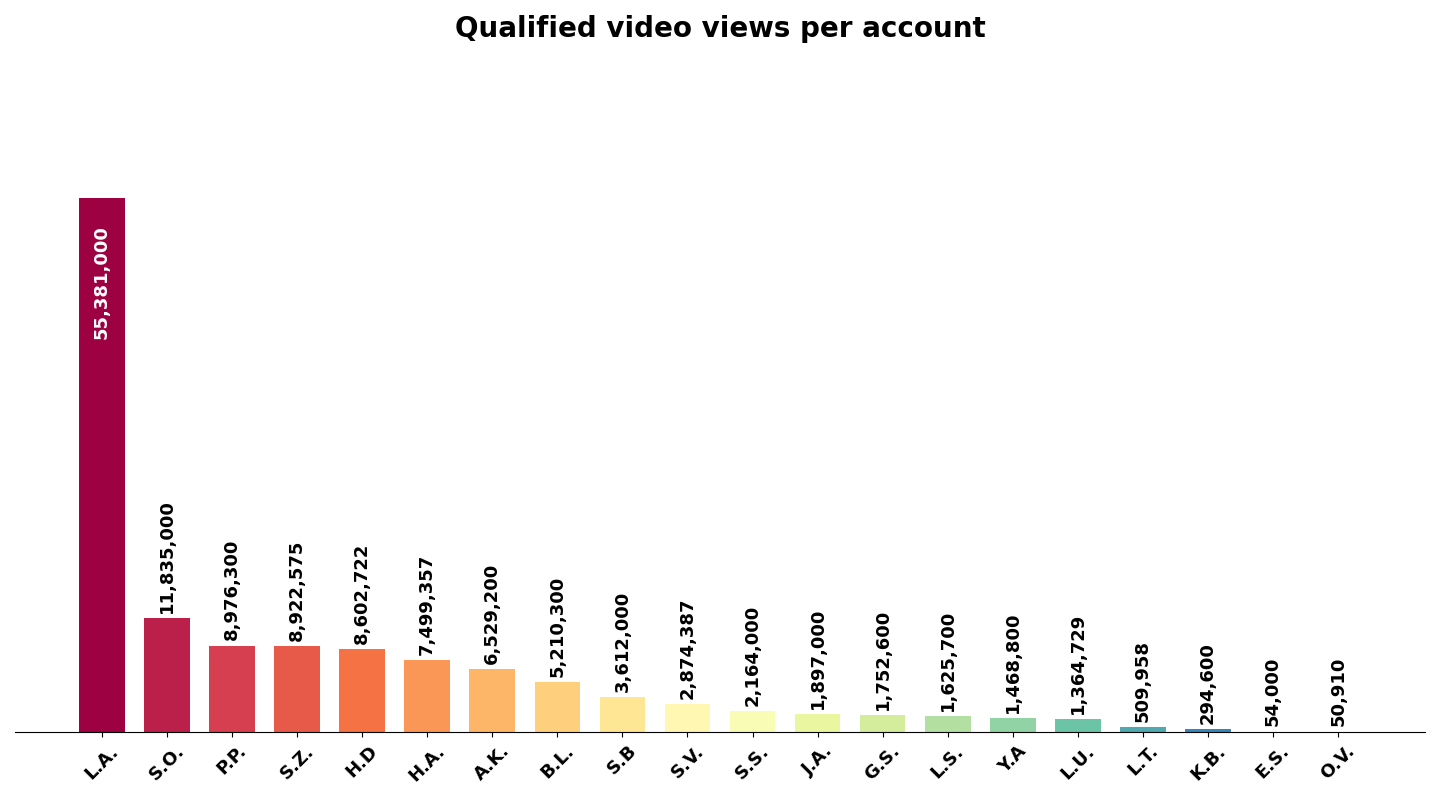

As of January 27th, Riddance tallied over 130 million views attributed to just 144 sexualized AI videos showing underage girls’ likenesses.

This includes over 10 million views on videos that we classified as “egregiously sexual.”

There are likely hundreds of millions more views on the fringes that we didn’t count.

I was horrified to discover an algorithmic pipeline that started with sexualized AI adult content, transitioned to sexualized AI content with young girls, then devolved into showing real underage girls - often posted by their parents - on a pedophilic feed of AI-generated and real sexual content.

What’s especially troubling is how accessible and rampant it is. The accounts and videos surfaced in this investigation are a startling example of how AI content can exploit and thrive on short-form video algorithms with no capacity to mind the moral consequences of its creation, proliferation, and consumption.

First, I’ll describe the video format and why it’s so troubling. Then, I’ll describe how I found these videos, demonstrating how easy they are to find. We’ll cover their impact, the rules that should prevent them, and why we know they’re part of (and an introduction to) a pedophilic algorithm that implicates real kids. I’ll conclude with our theory of why this is difficult to solve, and likely to worsen without serious course-corrections from the AI and tech industry.

The video format we tracked

The main video format we’re concerned with has two clips, edited together, usually around 10 seconds total in length:

An AI-generated clip with a photo or video of a young girl

An AI-generated clip of the same person, now an adult, in a sexually suggestive video, typically wearing a bikini or lingerie

Here’s a real example:

A young girl sits on a driveway, cross-legged, in front of beige siding and an American flag. She holds a banana in her right hand, purses her lips, squints, and makes a goofy face. The caption says “2019”. Sudden cut to “2025”: a brunette woman with similar features, now joyfully flowing to music in a car wearing a scant pink bikini. The reel loops.

That’s an example of an “egregious sexual” video; one where a child holds a phallic object or is in an explicit pose. This is a minority of the content; remove the phallic object, and that’s what most of them are. Every video that follows this format is sexually suggestive and troubling, even if we don’t determine it to be “egregious.” More on that later.

Individually, neither half of the video format is worse than “PG-13” rated. But it’s disturbing to come across these with normal, human context awareness and common sense. And what’s worse is that Instagram reels loop after they’re done. So, you’re watching a sexualized adult woman, then boom - you see that same woman as an underage girl when the reel restarts.

For this reason, we included videos with three to four segments under this format. For example, some videos show a girl “growing up” into an adult. A 10-year-old girl wearing a nurse costume cuts to age 14, then age 16, then age 25 - now in tightly fitting scrubs and twerking. The video loops.

We’re not showing screenshots or videos, nor linking to them anywhere. But it’s not just me; I reluctantly showed a few videos to willing friends, family, and colleagues who wanted to understand what I was working on. There was unanimous shock and disgust.

What led us here?

In June of 2025, I started creating videos about AI and media literacy at @ShowtoolsAI on YouTube, Instagram, TikTok, and (occasionally) Facebook. From casual to serious topics, I address relatively harmless AI slop, how AI generates disturbing kids’ videos, and generally try to help people distinguish AI from real media. I hope that my perspective on AI literacy contributes to a larger media literacy toolkit.

By November, I wanted to spend more time on long-form reporting and investigations. I needed a written outlet decoupled from video platforms and their incentives, because discussing AI-generated sexualized content, from PG-13 rated influencers to AI porn, isn’t appropriate for short-form video (my main format until now). TikTok appropriately penalizes even mildly suggestive content. And, frankly, I didn’t think that viewers would appreciate being along for this ride.

So it’s no coincidence that this piece coincides with the release of Riddance, a platform about AI literacy, the impact of AI media, and figuring out what’s real on the internet. This investigation instilled an intense urgency around launching this website.

It’s extremely easy to find sexual AI videos

Just like real sexual content creators, AI sexual content creators have accounts on social media sites like Instagram. Their accounts host pictures and videos of their clothed selves, real or not, to comply with content moderation guidelines.

These creators use their bios to direct followers to porn sites, like OnlyFans or Fanvue (a.k.a. “AI OnlyFans”), where they sell their explicit stuff. The distribution and consumption of AI porn isn’t the focus of this piece, but its demand and financial rewards are the main incentives for people who create these AI-generated personas.

There’s a financial reward for edgy, unique, or exploitative sexual content that regular humans can’t or wouldn’t create. Real porn actors, from those who make fetish material to very edgy sexual videos, would not think to do what AI creators are doing right now, because it’s humiliating, disgusting, and probably illegal. That’s AI porn’s advantage. The people on camera bear no burden of shame or responsibility. Their human creators stay anonymous, and that creates awful incentives.

In November, I created a video showing how body-replacement AI generators give AI accounts the technology to re-skin real, stolen videos with sexualized characters. These AI generators include Alibaba’s Wan 2.2 Animate or Kling’s 2.6 Motion Control. After posting that video, I received heartbreaking messages from real women who had videos stolen and sexualized by AI accounts selling AI porn, using their bodies non-consensually. Importantly, none of these real women are involved with porn, or have any desire to be; they’re beauty and lifestyle influencers.

That was the first topic I wanted to cover: How AI-generated sexual content is created with assets of non-consenting real people. I started researching by tracking offending AI videos on Instagram.

Why Instagram?

People send me a lot of videos, usually asking for my judgement on whether a video is AI-generated. But it’s also common for people to send me AI videos that just trouble them, either on a personal or societal level. On TikTok, that’s usually fake AI influencers selling supplements. On Instagram, it’s usually AI-generated sexual content creators.

TikTok, YouTube, and X have sexual content as well, right? Yes, but:

TikTok is relatively restrictive of sexual content. Anecdotally, videos on my pages that show an AI woman in a modest swimsuit reach far fewer viewers on TikTok than on either Instagram or YouTube. My coverage of mature themes has performed well on Instagram because it permits the content in the first place.

YouTube Shorts is an offshoot of YouTube long-form videos, and while it’s extremely popular, influencers and adult creators focus on Instagram and X.

X openly hosts porn. Grok creates sexual imagery of adults and minors. Adult creators post directly to X. This isn’t news, and it’s not my focus. I started working on this piece before X released what I believe is the single worst feature a tech company has ever released. They brought AI-generated child sexual abuse material [CSAM] straight to social media.

The road to “Rock Bottom”

I started tracking AI-generated adult content pages on Instagram, not by “following” the accounts, but by privately “saving” their notable Instagram Reels. Dumbly, I did this on my main professional account, where I store a huge library of AI-generated videos for reference. At first, the AI personas were mostly “traditional-appearing” AI-generated women.

But in early December, there was a startling uptick of AI-generated fetish content. In a tongue-in-cheek short video, I analyzed one of these accounts: an AI woman who fetishizes incredibly dark skin. I decided there wasn’t a responsible way to cover the women with two heads on one body, or the woman with three breasts, in video format.

I think AI personas are still pretty detectable for anyone with good media literacy and critical thinking skills. And yet, I don’t know how many followers of AI influencers - those that advertise astrology apps or sell videos on Fanvue - are actually aware that these characters are AI. I really hope that anyone following the two-headed woman is fully aware that she (they?) isn’t real. But, what about the impossibly pale AI porn actor?

I didn’t clock it at the time, but as I tracked these accounts by saving their posts, something bad was happening under the surface.

A “normal” AI adult character may be an AI woman “on the job.” For example, an AI-generated police woman who sells videos on Fanvue. They may post a video following this format:

A clip of them as a kid, dressed up as a police officer

Cuts to them as a young adult officer in training

Cuts to them as an adult police officer wearing an impractically tight uniform

A human creator is trying to make their fake AI persona feel more real. They’re showing life from before their impostor started posting. Many of these accounts popped up in late November, alongside the release of Google’s Nano Banana Pro image generation model. It’s crazy how many attractive twenty-somethings just happened to discover Instagram at that time. But, the “growing up” format was common for safe-for-work AI accounts, too. It’s also popular for real people posting nostalgic videos (more later on why you really should not do this).

But with hindsight, there were immoral creators taking advantage of this format and pushing it to its limit. On December 25th, I came across the first example of the format that troubled me so much, I had to take some time off to process it. What had I done to get this? How big was the problem?

How Riddance conducted this investigation

Allow me to break the fourth wall: This section is in-the-weeds of OSINT (Open-Source Intelligence) techniques. If you catch your eyes glazing over, skip ahead to the next section. But, for both transparency and further investigation, we decided to include it.

I found 11 offending videos on my main Instagram account between December 25th and 28th. After realizing the severity of the trend, we spun up a system to capture similar videos securely and responsibly. Our goal was to capture the scope of the problem, trace algorithmic ties between videos and accounts, and limit personal exposure as much as possible.

While a deep dive on our techniques is available in this post, here’s a summary of how we ran the investigation:

While using residential proxies with randomized IP addresses, including one outside of the USA, we created three Instagram accounts: the first on January 9th, the second on January 21st, and the third on January 23rd. Each account used different demographics and names.

We accessed Instagram on desktop browsers, through isolated Google Chrome profiles with Riddance’s in-house “Session Recorder” Chrome extension activated, which kept logs of our activity. After our first account tests between January 9th and 15th, we started seeing videos with real minors. Understanding the significance, we screen recorded the account creation and browsing processes for the second and third accounts for more evidence.

After account creation, we “seeded” accounts by navigating to 10 offending videos, watching them all the way through, engaging with them by either liking or saving them, and following the offending accounts. We proceeded to browse and allow Instagram’s suggestion algorithms to present us with new content. We scrolled the Instagram home page and interacted with only those videos that met the criteria of “AI sexualized video including a minor’s likeness.” When encountering an offending video, we sometimes clicked on the account to verify that they consistently posted only AI-generated material.

On accounts (1) and (3), we saved offending videos and followed the accounts. On account (2), we “liked” these videos and followed the accounts. As expected, “saving” videos prompted similar recommendations more quickly. “Liking” them yielded similar videos, but took more time.

Account (1) logged 40 minutes of browsing and interaction before stopping. Account (2) logged 41 minutes, and account (3) logged 22 minutes.

Browsing concluded when we hit “Rock Bottom” (described later) on all three accounts. After this, we revisited the saved videos and followed accounts, reported notable offending videos, and double-checked that we were only accounting for AI videos.

Finally, we combed through the most common and notable accounts to gather data and view counts on any qualifying “AI sexualized videos including a minor.” The 144 videos we collected were not all viewed during the browsing period, but were collected to assess the wider impact of the trend.

Some further, essential notes about our process:

Browsing sessions were kept short to limit exposure and personal impact, logging no more than 30 minutes at a time and spaced out across days.

All account creation and browsing was done by a human. We don’t use automation tools because human judgment is needed the entire time. We comply with Instagram’s Terms of Service, and we need to avoid any Instagram-side red flags raised by suspicious automation tools. As a result, browsing and collecting data was tedious.

We collected video URLs, account names, view count data, browsing session logs, screen recordings, and videos for reference. This data is only shared with professional collaborators, Meta, and the National Center for Missing & Exploited Children. We encountered no explicit CSAM, but have taken security and reporting seriously regardless. We reached out to Meta for comment and have not yet received a response.

The statistics

While a ton of AI-generated accounts start up every day, we tracked the most commonly encountered accounts and videos from our three tests. We anonymized twenty of them and ranked them by the view counts of offending videos.

In total, we qualified 20 Instagram accounts with 144 offending videos accumulating 130,625,138 views total.

Notes: As of February 10th, account “A.K.” was offline. Instagram has a variable rounding error depending on the view count. For example, a video with 12,315,239 views displays as “12.3 M”, while a video with 4,467 views displays the entire view count. We used the rounded numbers, because again: we did this all manually.
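To make the rounding note concrete, here is a minimal sketch of how abbreviated view counts could be turned into numbers, along with a cautious bound on what the rounding hides. It is illustrative only: our tally was done by hand, the function names are hypothetical, and the "K" and "B" suffixes are assumptions (only "M" appears in the example above).

```python
from decimal import Decimal

# Multipliers for abbreviated counts. Only "M" appears in the article's
# example; "K" and "B" are assumed here for completeness.
SUFFIXES = {"K": 1_000, "M": 1_000_000, "B": 1_000_000_000}


def parse_display_count(text: str) -> tuple[int, int]:
    """Return (value, max_error) for a displayed view count.

    max_error is the size of one step in the last displayed digit, a
    conservative bound that holds whether the display rounds or truncates.
    """
    cleaned = text.replace(",", "").replace(" ", "").upper()
    if cleaned and cleaned[-1] in SUFFIXES:
        multiplier = SUFFIXES[cleaned[-1]]
        number = Decimal(cleaned[:-1])
        # Number of digits shown after the decimal point, e.g. 1 for "12.3".
        decimals = max(0, -number.as_tuple().exponent)
        return int(number * multiplier), multiplier // (10 ** decimals)
    # Unabbreviated counts are displayed exactly.
    return int(cleaned), 0


def tally(displayed: list[str]) -> tuple[int, int]:
    """Sum the parsed values and their worst-case errors."""
    parsed = [parse_display_count(d) for d in displayed]
    return sum(v for v, _ in parsed), sum(e for _, e in parsed)


print(parse_display_count("12.3 M"))  # (12300000, 100000)
print(tally(["12.3 M", "4,467"]))     # (12304467, 100000)
```

Under these assumptions, a video displayed as “12.3 M” is recorded as 12,300,000 views with up to 100,000 views of uncertainty, while small counts carry none.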

Of the 144 videos, 19 were classified as “egregious sexual”, accounting for 10,353,200 views.
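For readers who want those figures as proportions, the arithmetic can be sketched as follows. The variable names are ours, and the inputs are the totals reported in this section.

```python
# Totals reported in this section.
total_videos = 144
total_views = 130_625_138
egregious_videos = 19
egregious_views = 10_353_200

# Share of the tracked videos, and of their views, classified "egregious sexual".
video_share = egregious_videos / total_videos
view_share = egregious_views / total_views

print(f"{video_share:.1%} of videos, {view_share:.1%} of views")
# 13.2% of videos, 7.9% of views
```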

Many accounts had one or no “egregious sexual” videos, but these egregious videos seemed to help out accounts that dared go there. “H.D.” had seven, “L.A.” had two, and “S.O.” had two. These are three of the top five accounts by total views.

These numbers aren’t meant to demonstrate the entire scope of these videos across all of Instagram. We could have kept finding more videos and accounts, but to what end? Furthermore, we only counted videos that were unambiguous. This means we only tallied videos that:

Started with a girl who was clearly underage

Mentioned their age was 17 or younger in the caption or a graphic

Started with underage girl(s) only, or a girl with an adult man.

If the first frame had the underage girl with her mom or grandma, or if the girl was ambiguously teenaged, it didn’t count. If we had counted every video that included an underage person at all, the numbers would have at least doubled within these 20 accounts alone. We could have done that, but we determined those videos were less clearly violating Meta’s community guidelines as they’re currently written.

Hitting “Rock Bottom”

When we started this investigation, we hypothesized that there was an abundance of AI-generated accounts using the offending video format. The theory was: at some point, our feed would become saturated with AI-generated sexualized videos with the likenesses of underage girls. We would then report these videos to Meta, write a story about their reach, and move on to other investigations. We knew this would be unpleasant, but our capture tools and media expertise give us an edge in finding AI videos.

We didn’t know “rock bottom” existed.

As I mentioned earlier, we used three test accounts for browsing and collecting data. We stopped testing whenever we hit “rock bottom” with each account. “Rock bottom” is when the recommendation algorithm reliably and repeatedly suggests posts with real underage girls. Some of these accounts are run by girls themselves, but most are family accounts run by parents. None of their posts are sexual, nor is there evidence that they willingly or knowingly appear in pedophilic algorithms.

Once a feed’s balance becomes:

40-50% AI-generated sexual content, most of it with underage AI girls,

30-40% real sexual content,

10-20% real underage girls, out of context but surrounded by sexual content,

5-10% random videos, advertisements, apparently unrelated to the rest of the content,

we’ve hit “rock bottom.”

And that’s when we stopped the tests. The journey to rock bottom is already horrible; I don’t want to spend any time there.

We originally intended to use just one account to capture AI videos. But once we discovered “rock bottom”, the story changed. We decided to replicate the test with multiple accounts to see if getting to “rock bottom” was a common outcome that we could responsibly report. Indeed, we saw many of the same AI creators, and real girls, on all three of our test accounts.

We can apply a broad understanding of recommendation algorithms to understand why this happened. The algorithm does not “detect” that the content depicts young girls, nor does it explicitly “try” to show more. Instead, the algorithm correlates the viewing habits of the test accounts with other pedophilic viewing habits, and serves new videos accordingly. Most viewers don’t discriminate between real and AI videos like we were trying to, so the algorithm served us real videos anyway. We did not interact with the real videos.
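To illustrate that kind of correlation, here is a toy sketch of user-based collaborative filtering. It is not Instagram’s system, whose details are not public; the function name, the overlap measure, and the placeholder item labels are all assumptions chosen for illustration. The point is that nothing in it inspects what a video contains: it only compares engagement histories.

```python
from collections import Counter


def recommend(target: set[str], others: list[set[str]], top_n: int = 3) -> list[str]:
    """Toy user-based collaborative filtering (hypothetical, for illustration).

    Scores unseen items by how strongly the accounts that engaged with them
    overlap with the target account. Item content is never inspected.
    """
    scores = Counter()
    for history in others:
        union = target | history
        if not union:
            continue
        # Jaccard overlap between the two engagement histories.
        similarity = len(target & history) / len(union)
        for item in history - target:
            scores[item] += similarity
    # Highest score first; ties broken alphabetically for determinism.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, score in ranked[:top_n] if score > 0]


# Placeholder labels only: each set is one account's engagement history.
test_account = {"a", "b"}
other_accounts = [{"a", "b", "c"}, {"a", "d"}, {"x", "y"}]
print(recommend(test_account, other_accounts))  # ['c', 'd']
```

In this sketch, the account that shares the most history with the test account has the most influence over what gets suggested next. Whatever else that account engaged with comes along, regardless of what it depicts.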

Instagram’s Policies and Content Moderation

Meta has policies that should restrict the offending AI videos from Instagram. I’ve put policies that they appear to violate in bold. First of all:

DO NOT POST:

Child sexual exploitation

Content, activity, or interactions that threaten, depict, praise, support, provide instructions for, make statements of intent, admit participation in, or share links of the sexual exploitation of children (including real minors, toddlers, or babies, or non-real depictions with a human likeness, such as in art, AI-generated content, fictional characters, dolls, etc).

This seems straightforward: “AI generated” child likenesses are not allowed in sexual contexts. This is in accordance with laws banning AI-generated Child Sexual Abuse Material (CSAM). The policy continues:

This includes but is not limited to:

[Sexual intercourse policies]

Children with sexual elements, including but not limited to:

Presence of aroused adult

Presence of sex toys or use of any object for sexual stimulation, gratification, or sexual abuse

Sexualized costume

Staged environment (for example, on a bed) or professionally shot (quality/focus/angles)

[Other bullet points not relevant to this topic]

Maybe I’ve mistakenly applied an overly broad view on what these policies are for. If that’s the case: it’s time for some new policies.

Optimistic that maybe no one had thought to report these videos to Instagram yet, I reported 23 videos during testing. Only one was taken down. Six others were “Limited to viewers above 18 years old,” and the rest were left as-is. The one that was taken down was an “egregious sexual” video, but it was similar to the others I reported. And, to be clear, the “egregious sexual” videos may just add the “presence of sex toys or use of any object for sexual stimulation.” Every other video that I tallied violated the rest of the highlighted material.

What to do about it

Recommendations for you, the reader

Don’t post photos or videos of real kids on social media.

Some of the real posts we came across are from small accounts with fewer than 2,000 followers. Others were from large family accounts with over 500,000 followers. This includes nostalgic videos. A video from a mildly well-known real creator who posted her childhood highlights showed up on accounts two and three.

The dark irony is that AI video companies like Kling have AI models that specialize in face or body control. You can submit a photo of a kid to make them do a “cute baby dance” or “expression challenge,” both viral dances. Seven of their twelve examples show kids or babies - it’s a focus of theirs. They’re using their technology to enhance kids’ likeness on social media, while ingesting these real kids’ photos into their training data for future AI models.

Recommendations for Meta:

Hopefully, Meta investigates offending accounts (which we provided to them) and interrogates the patterns that create this pipeline. While we acknowledge that moderating around 100 million photo and video posts per day is daunting, here are two concrete suggestions that we believe could mitigate harms in the future.

First, focus on moderating the most notable offending accounts through algorithmic capture. Disincentivize accounts who exploit the algorithm by “decapitating” the top offending accounts. As we’ll discuss in the next section, AI accounts are usually algorithmic wave-riders. Removing the most prominent accounts and videos not only disrupts the wave, but disincentivizes others from trying to ride it. But, you need white-hat, human auditors hopping on the wave for you, because automated tools lack the appropriate judgement.

Second, funnel more resources into third-party auditing and moderation. A great example is Take It Down, a third-party collaboration between Meta and NCMEC, a platform “for people who have images or videos of themselves nude, partially nude, or in sexually explicit situations taken when they were under the age of 18 that they believe have been or will be shared online.” Meta works with other third-party vendors to weed out nuanced content, and the findings in our investigation should fall in that category.

We believe incentives for platforms and watchdog organizations are aligned on this issue, and given the obvious and extreme harms of CSAM, as well as the pipelines leading to it, we’re hopeful for rapid progress.

Gaps in AI and algorithmic accountability

This isn’t just an Instagram problem. We reflected on the systemic failures I encountered throughout this investigation. The problem is on track to worsen as AI media generation improves and more AI content works its way to social media.

The fundamental, high-level problem is that there are no good methods for enforcing moral responsibility at points of contact between two or more autonomous systems at scale.

Companies making generative AI video models should do everything in their power to limit problematic content. But, a creator with bad intentions only needs to stitch together two AI videos that are potentially unproblematic in isolation to make content that is disturbing and downright harmful. If these videos were shot and edited by humans, many of them would be appropriately rejected, or not considered in the first place.

But the most sinister problem lies in the feedback loop between algorithmic engagement and automated, AI-generated content workflows.

There’s an explosion of automated AI accounts that operate with as little human input as possible. The topic and style of their videos are determined by the performance of other videos, either from the same creator or from channels the creator wishes to emulate. By indexing on well-performing videos, these creators automate prompt workflows to generate similar content, in hopes of riding the algorithmic wave.

The creators themselves have little interest in the actual content; they optimize only for engagement. If and when there is no human in the loop, the model-level guardrails are blind to the higher-level abuses they’re participating in when optimizing for views. There are no human editors, actors, or employees with the indispensable capacity to look outside of the scope of their specific role and step in and say “no, I will not be a part of this.”

Until AI and social media companies can effectively collaborate to prevent risks systematically, or regulators force them to do so, it’s imperative that each individual company over-indexes on safety and acts with an abundance of caution. At this moment, it’s important to interrogate why AI-generated kids are appropriate or necessary at all.

Special thanks to Mason Broxham (editor and Riddance co-founder), Drew Harwell (editor), and Eli Broxham (illustration).